Ex. 3.2

Ex. 3.2

Given data on two variables \(X\) and \(Y\), consider fitting a cubic polynomial regression model \(f(X)=\sum_{j=0}^3\beta_jX^j\). In addition to plotting the fitted curve, you would like a 95% confidence band about the curve. Consider the following two approaches:

(a)

At each point \(x_0\), form a 95% confidence interval for the linear function \(a^T\beta=\sum_{j=0}^3\beta_jx_0^j\).

(b)

Form a 95% confidence set for \(\beta\) as in (3.15), which in turn generates confidence intervals for \(f(x_0)\).

How do these approaches differ? Which band is likely to be wider? Conduct a small simulation experiment to compare the two methods.

Soln. 3.2

For the first method, at each point \(\textbf{x}_0\), the variance of \(\hat y_0 = \textbf{x}_0\hat\beta\) is

Then at each sample \((\textbf{x}_0, y_0)\), the confidence interval is calculated as

For the second method, denote \(\beta\) as the true value of coefficients, we have

The confidence region for \(\hat\beta\) is found by inverting the inequality above with respect to \(\hat\beta\). To do that, we first make Cholesky decomposition of \(\textbf{X}^T\textbf{X}=\textbf{U}^T\textbf{U}\) where \(\textbf{U}\) is upper triangular. Therefore, for vectors \(\hat\beta\) at the boundary of the confidence region, \(\textbf{U}(\hat\beta-\beta)\) lies on the 4-dimensional ball with radius \(r = \sigma \sqrt{\chi^2_{4, 0.05}}\). Hence for any vector \(\gamma\in\mathbb{R}^4\), we can first normalize it to the ball with radius \(r\) and the arrive \(\hat\beta\) by

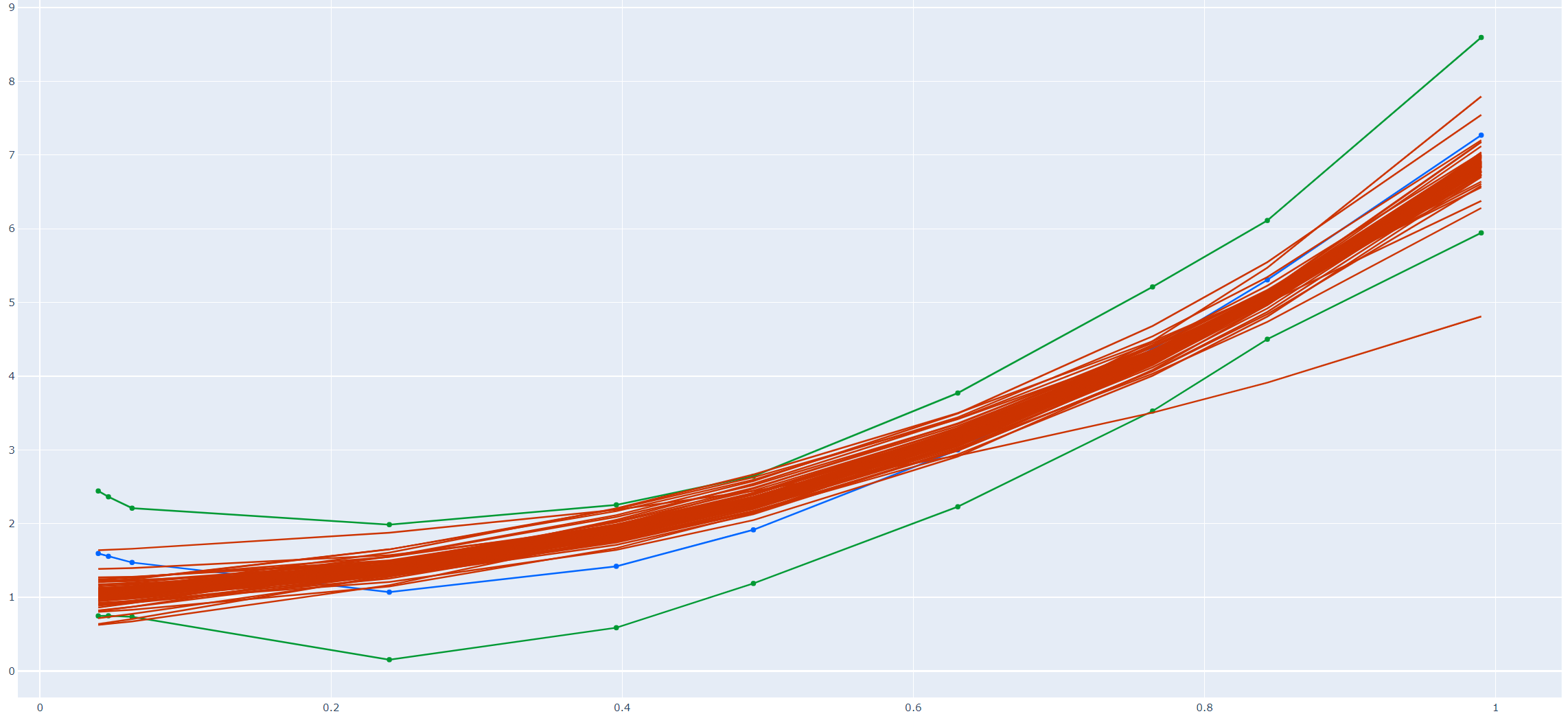

Note that the confidence region from the first method is expected to be wider than that from the second method. The reason is that the region from the first method is a simultaneous confidence region, that is, each of the coordinate \(\beta_i\) falls into the region with the given probability. While the one from the second is an elliptical confidence region, it's less strict than the simultaneous one thus it's narrower. This is also observed from Figure 1 below.

Figure 1 is drawn from a numerical simulation experiment. In the simulation we first draw 10 values, \(\{x_i, i=1,...,10\}\) ,uniformly from \([0,1]\). Then we generate \(y_i = 1 + x_i + 2x_i^2 + 3x_i^3 + \epsilon_i\) with \(\epsilon_i\sim N(0, 0.5)\). That is, the true value for \(\beta\) is \(\beta = (1,1,2,3)^T\in \mathbb{R}^4\).

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 | |

We then calculate confidence regions following the procedures described above.

1 2 3 4 5 6 7 8 9 10 11 | |

Note that in the second method, we sample 100 different vectors \(\gamma\) and thus obtain 100 different \(\hat\beta\) (of course, finally 100 different `curves' via \(\textbf{X}\hat\beta\)).

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 | |

The blue line is calculated from \(\hat\beta^{\text{OLS}}\), the two green lines are the 95% confidence region band from the first method, and the red lines are sampled from the boundary of the 95% confidence set from the second method. Note that most red ones lie in the confidence region formed by the green boundaries, however, they have the freedom to jump out.

1 2 3 4 5 6 7 8 9 10 11 12 | |

Code

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 | |