Ex. 10.5

Ex. 10.5

Multiclass exponential loss (Multi-class adaboost). For a \(K\)-class classification problem, consider the coding \(Y=(Y_1,...,Y_K)^T\) with

Let \(f=(f_1,...,f_K)^T\) with \(\sum_{k=1}^Kf_k=0\), and define

(a)

Using Lagrange multipliers, derive the population minimizer \(f^\ast\) of \(L(Y, f)\), subject to the zero-sum constraint, and relate these to the class probabilities.

(b)

Show that a multiclass boosting using this loss function leads to a reweighting algorithm similar to AdaBoost, as in Section 10.4.

Soln. 10.5

(a)

We follow the similar arguments in Ex. 10.2. We're interested in

subject to

The Lagrange multiplier, denoted as \(H(f_1,...,f_k,\lambda)\), becomes

Note that \(f_1(x) + ... + f_K(x) = 0\), so \(\eqref{eq:10.5multi}\) simplifies to

For \(k=1, ..., K\), setting \(\frac{\partial H}{\partial f_k(x)} = 0\) and \(\frac{\partial H}{\partial \lambda} = 0\) yields

Note that we have \(K+1\) equations with \(K+1\) unknowns. We first represent \(f_k(x)\) in terms of \(\lambda\) by the first set of equations above:

Then by \(\sum_{k=1}^Kf^\ast_k(x)=0\) we have

so that

Plug \(\lambda\) above back to \(\eqref{eq:fkx}\) we obtain that for \(k = 1,...,K\),

Once \(f^\ast_k(x)\) are estimated for \(k=1,...,K\), we are able to estimate \(P(Y=k|x)\) in terms of \(f^\ast_k(x)\). From \(\eqref{eq:fkx}\) and \(\eqref{eq:10lambda}\), we obtain for \(k=1,...,K\),

Summing \(P(Y=k|x)\) for \(k=1,...,K\) we obtain

thus

Plugging equation above back to \(\eqref{eq:pyk}\) we get

(b)

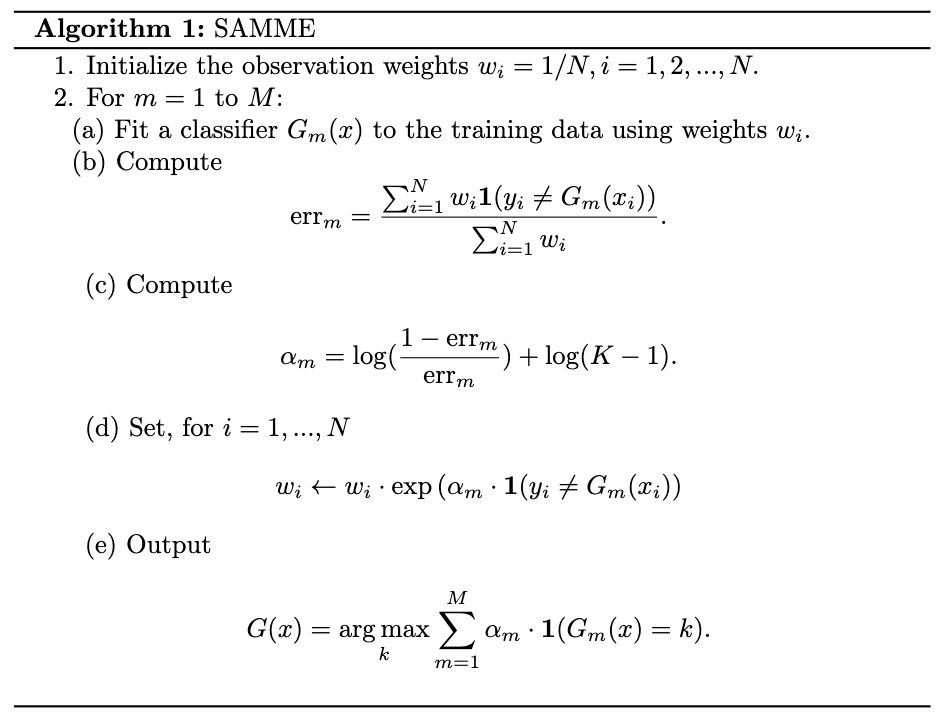

Following the idea of two-class AdaBoost, we start with a Stagewise Additive Modeling using a Multi-class Exponential loss function (SAMME).

Note that SAMME shares the same simple modular structure of AdaBoost with a simple but subtle different in (c), specifically, the extra term \(\log(K-1)\).

The link between exponential loss function and SAMME follows the same arguments in Section 10.4. Specifically, we have as (10.12),